
Hebbian Learning

The Hebbian learning rule, also known as the Hebb learning rule, was proposed by Donald O. Hebb. It is one of the earliest and simplest learning rules for neural networks, and it is used for pattern classification. It is applied to a single-layer neural network, i.e., a network with one input layer and one output layer. The input layer can have any number of units, say n, while the output layer has only one unit. The Hebbian rule works by updating the weights between the neurons of the network for each training sample.

Hebbian Learning Rule Algorithm:

- Set all weights to zero, $w_i = 0$ for $i = 1$ to $n$, and set the bias $b$ to zero.

- For each training pair $s : t$ (input vector $s$ and target output $t$), repeat the three steps below.

- Set the activations of the input units to the input vector: $x_i = s_i$ for $i = 1$ to $n$.

- Set the activation of the output neuron to the corresponding target value, i.e., $y = t$.

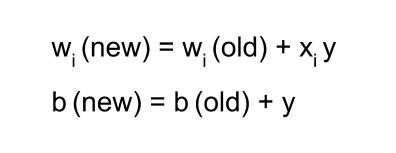

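- Update the weights and the bias by applying the Hebb rule for all $i = 1$ to $n$:

  $$w_i(\text{new}) = w_i(\text{old}) + x_i\,y$$

  $$b(\text{new}) = b(\text{old}) + y$$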
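The training steps above can be sketched in Python as follows. This is a minimal illustration, not a reference implementation. It assumes bipolar (+1/-1) inputs and targets. The names `hebb_train` and `classify` are hypothetical. The sign-based `classify` helper used to check the result is also an assumption, since the algorithm above only describes training.

```python
def hebb_train(samples):
    """Train a single-output Hebb net.

    samples: list of (s, t) pairs, where s is a list of n input values
    and t is the target output for that input.
    Returns the learned weights w (length n) and the bias b.
    """
    n = len(samples[0][0])
    # Initialise all weights w_i and the bias b to zero.
    w = [0] * n
    b = 0
    for s, t in samples:
        # Set the input activations x_i = s_i and the output y = t.
        x = list(s)
        y = t
        # Hebb rule: w_i(new) = w_i(old) + x_i * y, b(new) = b(old) + y.
        for i in range(n):
            w[i] += x[i] * y
        b += y
    return w, b


def classify(w, b, x):
    """Hypothetical helper (assumption, not part of the algorithm above):
    apply a bipolar step function to the net input b + sum(w_i * x_i)."""
    net = b + sum(wi * xi for wi, xi in zip(w, x))
    return 1 if net >= 0 else -1


# Illustrative data (assumption): a bipolar AND gate with 2 inputs.
and_samples = [([1, 1], 1), ([1, -1], -1), ([-1, 1], -1), ([-1, -1], -1)]
w, b = hebb_train(and_samples)
print(w, b)  # [2, 2] -2
print([classify(w, b, s) for s, _ in and_samples])  # [1, -1, -1, -1]
```

Each sample is visited exactly once, as in the algorithm. The weights therefore end up as the sum of $x_i\,t$ over all training pairs, and the bias ends up as the sum of the targets. For the bipolar AND data, this gives $w = (2, 2)$ and $b = -2$.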
